Active Full Backup vs. Synthetic Full Backup for Virtual Machines

If you’re relatively new to the IT field or you’re a small business owner interested in making sure your business will survive a data disaster event like a flood or fire, the different considerations you’ll have to look at when creating a backup strategy for virtual machines can seem overwhelming at first blush.

There are many methods for backing up virtual machines, but I’ll focus on two of them and the pros and cons of each: active full backups and synthetic full backups. Then I’ll give a brief overview of a third method. But first, a quick look at the bigger picture.

Virtual Machines Explained (Basically, it’s like this…)

Virtual machines are what they say on the tin: a virtualized computer that acts just like a normal computer and emulates physical hardware like CPUs, memory, hard drives, and so on. It is, essentially, a computer within a computer. And it’s sandboxed from its host computer, so its files and programs can’t interact with the host machine’s main operating system (OS), files, or other programs, or with other virtual machines running on the same host.

It’s also worth noting that virtual machines run on a piece of software called a hypervisor, which lets multiple virtual machines share the same physical computer. The hypervisor ensures that the host computer’s resources are distributed effectively across the guest virtual machines it manages. Consolidating many virtual machines onto one physical host reduces the space, energy, and maintenance that separate physical servers would require.

Virtual machines are used to test alpha or beta software, run software built for one OS on another, and host multiple websites cost-effectively, among other things. And like any system that holds important data, they need to be backed up.

Before we get to the two specific backup methodologies, there are other things you’ll have to look at as well, such as your company’s requirements for retaining data, recovery time objective (RTO), recovery point objective (RPO), and backup level. You can back up the entire host system at the physical level, back up virtual machines at the hypervisor level, or back up from within each virtual machine itself. Whichever strategy you choose, you’ll have to also choose a backup method.

Two such methods loom large in consideration: active full backup and synthetic full backup.

Active Full Backup

An active full backup produces a full backup of a virtual machine. It copies every piece of data that’s part of the virtual machine from the source server to the backup repository. This method works well if your backup repository is in heavy use or is generally slow, because the repository only has to write the incoming data; most of the strain falls on reading data from the source.

Producing an active full backup can take a long time if your host runs many other virtual machines and is consequently under heavy load. Active full backups also slow down considerably when network traffic is heavy.
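As a rough illustration of why network load matters, the Python sketch below estimates how long it takes to move backup data over a link. The VM size, daily change, and link speeds are hypothetical figures chosen for the example, and the formula ignores protocol overhead, compression, and disk speed.

```python
def transfer_hours(size_gb: float, effective_gbps: float) -> float:
    """Rough time in hours to move size_gb gigabytes over a link that
    delivers effective_gbps gigabits per second (ignores all overhead)."""
    gigabits = size_gb * 8
    return gigabits / effective_gbps / 3600

# Hypothetical figures for illustration only.
vm_size_gb = 500       # whole virtual machine, copied by an active full backup
daily_change_gb = 25   # data changed in one day (5% of the VM)

print(round(transfer_hours(vm_size_gb, 1.0), 2))        # 1.11  full copy, idle 1 Gbit/s link
print(round(transfer_hours(vm_size_gb, 0.25), 2))       # 4.44  full copy, only 250 Mbit/s free
print(round(transfer_hours(daily_change_gb, 0.25), 2))  # 0.22  one day's changes, same busy link
```

When only a quarter of the bandwidth is free, the full copy takes four times as long, while copying just a day’s changed data stays comparatively quick.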

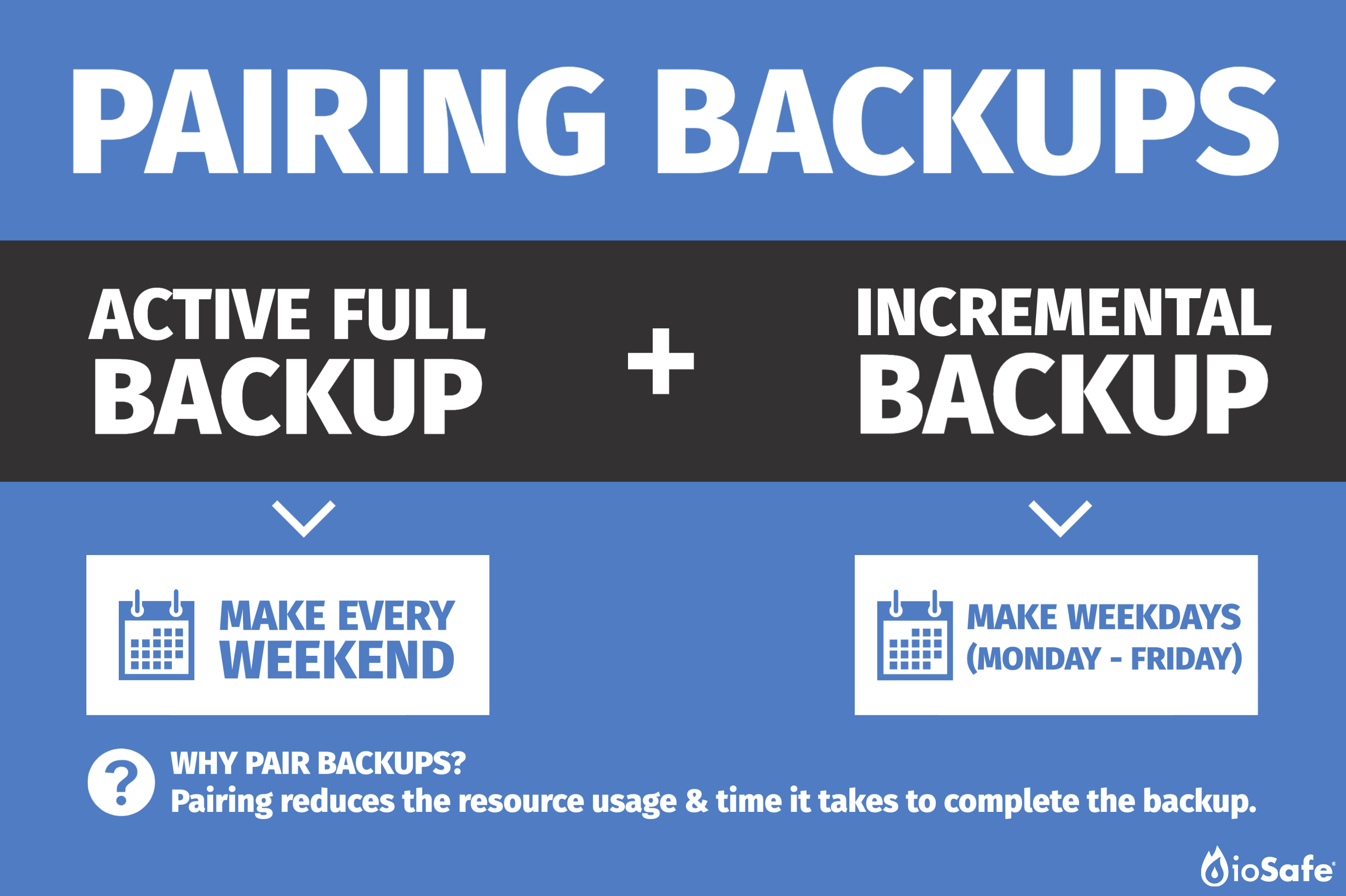

For this reason, many people pair active full backups with incremental backups. An incremental backup copies only the data that has changed since the previous backup (whether that was the full backup or an earlier incremental backup), so it uses a fraction of the resources and time an active full backup needs.
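Here is a minimal Python sketch of that idea. It models a virtual disk as a dictionary of numbered blocks, a simplification assumed for illustration; real backup tools typically rely on the hypervisor to report which blocks changed rather than comparing every block. The function name `incremental_backup` is hypothetical.

```python
from typing import Dict

# Simplified model (assumption): a virtual disk maps block number -> block contents.
Disk = Dict[int, bytes]

def incremental_backup(previous_state: Disk, current_state: Disk) -> Disk:
    """Return only the blocks that differ from the state captured by the previous backup."""
    return {
        block_id: data
        for block_id, data in current_state.items()
        if previous_state.get(block_id) != data
    }

saturday = {0: b"boot", 1: b"app-v1", 2: b"db-v1"}  # state at the full backup
monday = {0: b"boot", 1: b"app-v1", 2: b"db-v2"}    # only block 2 changed
tuesday = {0: b"boot", 1: b"app-v2", 2: b"db-v2"}   # only block 1 changed since Monday

print(incremental_backup(saturday, monday))  # {2: b'db-v2'}
print(incremental_backup(monday, tuesday))   # {1: b'app-v2'}
```

Tuesday’s incremental is computed against Monday’s state, not Saturday’s, so it holds only block 1; compared against Saturday it would also repeat block 2.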

Most people combine them in a schedule similar to this: an active full backup is made each weekend, with incremental backups made Monday through Friday. The following weekend, the next active full backup replaces them, and the old set is discarded.

So, suppose your source fails on a Wednesday. You’ll need the full backup from the weekend plus the incremental backups from Monday and Tuesday to restore your data to where it was on Wednesday morning.
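The restore described above can be sketched in Python, again assuming a simplified model in which a disk is a dictionary of numbered blocks (a hypothetical model, not how any particular product stores backups). The incremental backups must be applied oldest first, because a later backup can overwrite a block that an earlier one changed.

```python
from typing import Dict, List

Disk = Dict[int, bytes]  # simplified model: block number -> block contents

def restore(full_backup: Disk, incrementals: List[Disk]) -> Disk:
    """Rebuild a disk from a full backup by applying each incremental backup in order."""
    disk = dict(full_backup)
    for inc in incrementals:  # oldest first; newer blocks overwrite older ones
        disk.update(inc)
    return disk

weekend_full = {0: b"boot", 1: b"app-v1", 2: b"db-v1"}
monday_inc = {2: b"db-v2"}
tuesday_inc = {1: b"app-v2", 2: b"db-v3"}

wednesday_morning = restore(weekend_full, [monday_inc, tuesday_inc])
print(wednesday_morning)  # {0: b'boot', 1: b'app-v2', 2: b'db-v3'}

# Applying the incrementals in the wrong order leaves a stale database block.
wrong_order = restore(weekend_full, [tuesday_inc, monday_inc])
print(wrong_order[2])  # b'db-v2'
```

Every backup in the chain is required: losing Monday’s incremental would make the restored disk incorrect even though Tuesday’s is intact.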

Ultimately, though, whether you use active full backups alone or combined with incremental backups, how much their heavy resource usage matters depends greatly on the characteristics of your company’s IT infrastructure and the way it’s used. If your company uses a data deduplication appliance, for example, you might prefer active full backups.

A deduplication appliance is hardware that stores only one copy of each unique piece of data in a backup location. It increases backup efficiency by avoiding rewriting identical data during a backup and maximizes the storage capacity available for backups. However, these appliances are typically optimized for writing data and are comparatively slow at reading it. That means methods like synthetic full backups, which read data back from the backup repository, can take longer on them than active full backups, which don’t. Let’s look at synthetic backups in more detail.
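To illustrate what deduplication means, here is a toy Python sketch of a content-addressed store, one common way to implement it (assumed here for illustration; appliances differ in how they split and index data). Each block is keyed by its SHA-256 hash, so identical blocks are stored only once. The sketch says nothing about read or write speed, and the class name `DedupStore` is hypothetical.

```python
import hashlib
from typing import Dict, List

class DedupStore:
    """Toy deduplicating store: each unique block is kept once, keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self.blocks: Dict[str, bytes] = {}

    def write(self, data: bytes) -> str:
        digest = hashlib.sha256(data).hexdigest()
        # Store the block only if an identical block isn't already present.
        self.blocks.setdefault(digest, data)
        return digest

    def read(self, digest: str) -> bytes:
        return self.blocks[digest]

store = DedupStore()
# Two full backups of the same VM a week apart; only one block has changed.
week1: List[str] = [store.write(b) for b in (b"boot", b"app-v1", b"db-v1")]
week2: List[str] = [store.write(b) for b in (b"boot", b"app-v1", b"db-v2")]

print(len(store.blocks))     # 4  unique blocks stored for 6 block writes
print(store.read(week2[2]))  # b'db-v2'
```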

Synthetic Full Backup

To create a synthetic full backup, you’ll need to start with an active full backup. Afterward, no further active full backups are taken. Instead, only incremental backups are produced from that point onward.

As mentioned previously, incremental backups copy only the data that has changed since the previous backup, so they take a fraction of the time and resources an active full backup needs.

Unlike the active full plus incremental approach, no data is pulled from the source to create a new full backup. Instead, at a set interval, the previous full backup and all the incremental backups taken since are combined into a new full backup on the backup repository. Because this full backup is generated entirely from existing backups on the repository rather than from the source, as an active full backup is, it’s called a synthetic full backup.

So, with the same weekly schedule as in the example above, an active full backup is made on the first weekend, with incremental backups made Monday through Friday. On the next weekend, the active full backup is combined with the incremental backups to form a synthetic full backup that matches the source data as of Friday’s incremental backup, even though no active full backup is ever taken from the source again.

The active full backup and the incremental backups are then discarded, and incremental backups are taken again the next Monday through Friday. The following weekend, these are combined with the previous synthetic full backup to form a new, up-to-date synthetic full backup, after which the old synthetic full backup and its incremental backups are discarded. The cycle then repeats.
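The weekly cycle above can be sketched in Python with a toy repository class, again modeling a disk as a dictionary of numbered blocks. The class and method names are hypothetical. The point to notice is that `synthesize_full` only touches data already in the repository; the source is read only for the initial active full backup and for each incremental.

```python
from typing import Dict, List

Disk = Dict[int, bytes]  # simplified model: block number -> block contents

class SyntheticRepository:
    """Toy backup repository that builds synthetic full backups from its own files."""

    def __init__(self, active_full: Disk) -> None:
        self.full: Disk = dict(active_full)  # the one active full backup, copied from the source
        self.incrementals: List[Disk] = []

    def add_incremental(self, changed_blocks: Disk) -> None:
        self.incrementals.append(dict(changed_blocks))

    def synthesize_full(self) -> None:
        """Merge the current full backup with its incrementals, then discard the old files.
        Reads only repository data; the source is not involved."""
        new_full = dict(self.full)
        for inc in self.incrementals:  # oldest first, so newer changes win
            new_full.update(inc)
        self.full = new_full           # replaces the previous full backup
        self.incrementals = []         # merged incrementals are no longer needed

source = {0: b"boot", 1: b"app-v1", 2: b"db-v1"}
repo = SyntheticRepository(source)     # first weekend: active full backup

# Monday to Friday: hypothetical changes, each captured by an incremental backup.
for change in [{2: b"db-v2"}, {1: b"app-v2"}, {2: b"db-v3"}, {}, {2: b"db-v4"}]:
    source.update(change)
    repo.add_incremental(change)

repo.synthesize_full()                 # next weekend: built on the repository
print(repo.full == source, len(repo.incrementals))  # True 0
```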

So, supposing again that your source fails on a Wednesday, you’ll need the synthetic full backup from the previous weekend plus the incremental backups from Monday and Tuesday to restore your data to where it was on Wednesday morning.

The main difference between a synthetic full backup and an active full backup with incremental backups is that the bulk of the processing takes place on the backup repository instead of on your source. This reduces the load on your host and avoids copying a large amount of data over the network, as happens when you take an active full backup. Only the incremental backups, which are much smaller than full backups, use the source’s resources and the network. If your backup repository is fast or lightly used, this also shortens the time it takes to create backups.

The other difference is that after the first active full backup, you’ll never have to take another full backup from the source. This can improve your recovery time: because most of the resource usage falls on the backup repository rather than on your source, you can make the interval between synthetic full backups shorter than would be practical with active full backups. A shorter interval between full backups means that, on average, fewer incremental backups are needed to restore your source.

For example, suppose Tom creates active full backups every Saturday and incremental backups Monday through Friday, while Harry creates synthetic full backups every Saturday. Because Harry doesn’t have to use resources from the source to create new full backups, he also creates synthetic full backups on Wednesdays, with incremental backups on Monday, Tuesday, Thursday, and Friday.

If both Tom and Harry suffer a data disaster on Friday, Tom has to reassemble his data from the previous Saturday’s active full backup plus his incremental backups from Monday through Thursday. Harry only needs his synthetic full backup from Wednesday and his incremental backup from Thursday. Harry can recover faster because he has fewer backups to reassemble.
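The difference between Tom’s and Harry’s schedules can be checked with a small Python sketch that works out which backups a restore needs. It assumes each day’s backup runs at the end of the day and that the failure happens during the failure day, before that day’s backup; the schedule format and function name are hypothetical, and Harry’s Wednesday "full" entry stands for his synthetic full backup.

```python
from typing import Dict, List, Tuple

DAYS = ["Sat", "Sun", "Mon", "Tue", "Wed", "Thu", "Fri"]

def restore_chain(schedule: Dict[str, str], failure_day: str) -> List[Tuple[str, str]]:
    """Return the backups needed to restore, assuming backups run at the end of each day
    and the failure happens during failure_day, before that day's backup ran."""
    chain: List[Tuple[str, str]] = []
    for day in DAYS[:DAYS.index(failure_day)]:
        kind = schedule.get(day)
        if kind == "full":
            chain = [(day, kind)]  # a newer full backup makes older backups unnecessary
        elif kind == "incremental":
            chain.append((day, kind))
    return chain

tom = {"Sat": "full", "Mon": "incremental", "Tue": "incremental",
       "Wed": "incremental", "Thu": "incremental", "Fri": "incremental"}
harry = {"Sat": "full", "Mon": "incremental", "Tue": "incremental",
         "Wed": "full", "Thu": "incremental", "Fri": "incremental"}

print(len(restore_chain(tom, "Fri")))  # 5: Saturday's full plus Monday-Thursday incrementals
print(restore_chain(harry, "Fri"))     # [('Wed', 'full'), ('Thu', 'incremental')]
```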

Of course, synthetic full backups have the potential downside of placing most of the resource usage on the backup repository, so they’re not a good fit if your backup repository is in frequent use or is slow; either will make creating backups and restoring data take longer.

This also applies if you’re using a data deduplication appliance as your backup repository since such appliances are typically optimized to write data instead of reading data. And synthetic backups require reading the data from your previous synthetic full backup and incremental backups to create an updated synthetic full backup.

But if your virtual machine runs on a host that commonly sees heavy resource usage from the other virtual machines it hosts, synthetic full backups are worth setting up to see whether they work for you.

Forever Incremental Backup

One final method worth considering is the forever-incremental backup. This method is something of a cross between the active full and synthetic full approaches. As with both, it begins with an active full backup, and as with synthetic full backups, only incremental backups are taken afterward. But unlike the synthetic method, the incremental backups are never merged into a new full backup.

Instead, after each incremental backup runs, its data is split into individual blocks and deduplicated, and each unique block is given a reference number. A recovery point records those references. It works like a catalogue of where each piece of data lives on your backup repository, so that a complete backup of your source can be reassembled when you need it.

So, for a third time, suppose your source fails on a Wednesday. Your backup system looks up the recovery point you’re restoring from, then reads each block of data in the backup repository that the recovery point references to reassemble your virtual machine.
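A toy Python sketch of this approach follows. It assumes a SHA-256 hash serves as each block’s reference number and that a recovery point is simply a map from block position to reference; both are simplifications chosen for illustration, and real products use their own formats. The class and method names are hypothetical.

```python
import hashlib
from typing import Dict, List

class ForeverIncrementalRepository:
    """Toy forever-incremental store: deduplicated blocks plus a catalogue of recovery points."""

    def __init__(self) -> None:
        self.blocks: Dict[str, bytes] = {}               # reference -> block data, stored once
        self.recovery_points: List[Dict[int, str]] = []  # block position -> reference

    def back_up(self, changed_blocks: Dict[int, bytes]) -> int:
        """Store changed blocks and record a new recovery point; return its index."""
        # Start from the previous recovery point (empty for the initial active full backup).
        point = dict(self.recovery_points[-1]) if self.recovery_points else {}
        for position, data in changed_blocks.items():
            ref = hashlib.sha256(data).hexdigest()
            self.blocks.setdefault(ref, data)  # keep only one copy of identical blocks
            point[position] = ref
        self.recovery_points.append(point)
        return len(self.recovery_points) - 1

    def restore(self, point_index: int) -> Dict[int, bytes]:
        """Reassemble the disk by following each reference in one recovery point."""
        point = self.recovery_points[point_index]
        return {position: self.blocks[ref] for position, ref in point.items()}

repo = ForeverIncrementalRepository()
sat = repo.back_up({0: b"boot", 1: b"app-v1", 2: b"db-v1"})  # initial active full backup
mon = repo.back_up({2: b"db-v2"})                             # incremental
tue = repo.back_up({2: b"db-v1"})                             # reverted: block already stored

print(len(repo.blocks))   # 4
print(repo.restore(tue))  # {0: b'boot', 1: b'app-v1', 2: b'db-v1'}
```

Restoring Monday’s recovery point returns the database block as it was on Monday, even though no full backup file for Monday was ever written.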

Like synthetic full backups, this avoids straining your source host or network traffic, and like active full backups, it places little extra load on your backup repository, since no merge step ever runs; the heavy reading happens only when you actually restore data. It can also save much more space than active or synthetic full backups, because data is stored as deduplicated individual blocks rather than in incremental or full backup files.

Not every backup repository or software product offers this method, but where it’s available, it might be the best of both worlds, depending on your needs.

There’s no single perfect solution for virtual machine backup. As with most things, there are tradeoffs, so the way your IT infrastructure is used each day and your organization’s policies will largely determine which method is the best fit. The choice is yours, but now you’re better equipped to make it.

Originally published Dec 16 2020, updated Dec 16 2020

Annie Wynn

Guest Blogger

Annie Wynn, our guest blogger for this series, has been a digital nomad for over five years, traveling the US and Canada in her Alto trailer. You can follow her adventures at www.wynnworlds.com.
